CS11 - Understanding Data Collection with APIs

A hands-on guide to collecting structured data with Python and real-world APIs

In today’s world, knowing how to collect clean, relevant, and timely data is essential for any data professional. While there are many ways to gather data, one of the most reliable and scalable methods is through APIs (Application Programming Interfaces).

Cheatsheet & code at the end ‼️

In this issue, we’ll break down the essentials of using APIs for data collection, from how they work to how you can start using them in Python, complete with a practical example using Eurostat, the EU’s official statistics portal.

Let’s dive in! 👇🏻

🤔 What’s an API, and Why Should You Care?

An API (Application Programming Interface) is a set of rules that lets two software systems communicate.

Imagine a restaurant 🧑🏻🍳

You don’t go into the kitchen to prepare your food—you place your order with the waiter, who passes your request to the chef and brings your food back.

Similarly, an API receives your request, fetches the data from a source, and returns it in a structured format (usually JSON or XML).

This makes it easy to integrate external data into your apps or analyses.
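To make "structured format" a bit more concrete, here is a minimal sketch that reads the same record from JSON and from XML using only Python's standard library. Both payloads are hypothetical and written by hand for illustration; they do not come from any real API.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads: the same record as an API might return it in JSON or XML.
json_payload = '{"country": "ES", "year": 2019, "nights": 1250}'
xml_payload = "<record><country>ES</country><year>2019</year><nights>1250</nights></record>"

# JSON maps directly onto Python dicts, lists, strings, and numbers.
from_json = json.loads(json_payload)

# XML gives a tree of elements; every value arrives as text and needs converting.
root = ET.fromstring(xml_payload)
from_xml = {child.tag: child.text for child in root}
from_xml["year"] = int(from_xml["year"])
from_xml["nights"] = int(from_xml["nights"])

print(from_json == from_xml)
```

JSON keeps its numeric types when parsed, while the XML values had to be converted by hand, which is one reason many Python workflows prefer JSON when an API offers both.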

Anatomy of an API Call 🔧

Every API interaction typically includes:

Client: The software requesting the data (e.g. your Python script)

Request: The structure of what you’re asking for

Headers: Extra information sent with the request, such as API keys

The API Server: The system that responds to your request

The API Endpoint: URL to access specific data or actions.

Response: The result you get, often in a machine-readable format

This communication allows applications to share information or functionalities efficiently, enabling tasks like fetching data from a database or interacting with third-party services.
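The pieces listed above can be labeled on a concrete request. The sketch below builds a request object with the standard library's urllib, without sending it, so it runs offline. The endpoint, parameters, and key are placeholders (assumptions for illustration), not a real service.

```python
from urllib.parse import urlencode, urlsplit
from urllib.request import Request

# Hypothetical endpoint and key, used only to label the parts of a call.
endpoint = "https://api.example.com/data"
query = urlencode({"query": "example", "limit": 10})

# Build the request object without sending it (no network access needed).
request = Request(
    f"{endpoint}?{query}",
    headers={"Authorization": "Bearer YOUR_API_KEY"},
    method="GET",
)

parts = urlsplit(request.full_url)
print("Method:  ", request.get_method())
print("Server:  ", parts.netloc)
print("Endpoint:", parts.path)
print("Query:   ", parts.query)
print("Headers: ", dict(request.header_items()))
```

Your script is the client, the printed fields make up the request, and whatever the server sends back once the request is actually sent is the response.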

Why Use APIs for Data Collection?

APIs offer several advantages for data collection:

Efficiency: They provide direct access to data, eliminating the need for manual data gathering.

Real-time Access: APIs often deliver up-to-date information, which is essential for time-sensitive analyses.

Automation: They enable automated data retrieval processes, reducing human intervention and potential errors.

Scalability: APIs can handle large volumes of requests, making them suitable for extensive data collection tasks.

Implementing API Calls in Python

Making an introductory API call in Python is one of the easiest and most practical exercises to get started with data collection. The popular requests library makes it simple to send HTTP requests and handle responses.

A simple API call request would look as follows 👇🏻

import requests

# Define the API endpoint
url = "https://api.example.com/data"

# Optional headers (e.g., for authentication)
headers = {
    "Authorization": "Bearer YOUR_API_KEY",
    "Content-Type": "application/json"
}

# Optional parameters or payload
params = {
    "query": "example",
    "limit": 10
}

# Make the GET request
response = requests.get(url, headers=headers, params=params)

# Print the response
if response.status_code == 200:
    print("Success:", response.json())
else:
    print("Error:", response.status_code, response.text)

Example 1: Using the Random User API

To demonstrate how it works, we'll use the Random User Generator API, a free service that provides dummy user data in JSON format, perfect for testing and learning.

Here’s a step-by-step guide to making your first API call in Python.

STEP 1 - Install the Required Libraries:

pip install requests pandas

STEP 2 - Import the Libraries:

import requests
import pandas as pd

STEP 3 - Check the documentation page

Before making any requests, it's important to understand how the API works. This includes reviewing available endpoints, parameters, and response structure. Start by visiting the Random User API documentation.
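As a rough picture of what "response structure" means here, the sketch below uses a hand-written sample shaped like the response the Random User API documents: a "results" list of nested user records plus an "info" block. Every value is invented, and the flatten helper is a hypothetical name introduced only for this illustration.

```python
# Hand-written sample in the shape the Random User API documents:
# a "results" list of nested user records plus an "info" block.
# All values below are invented for illustration.
sample_response = {
    "results": [
        {
            "gender": "female",
            "name": {"title": "Ms", "first": "Ada", "last": "Example"},
            "location": {"city": "Springfield", "country": "United States"},
            "email": "ada.example@example.com",
            "nat": "US",
        }
    ],
    "info": {"results": 1, "page": 1},
}


def flatten(record, prefix=""):
    """Turn nested dictionaries into one flat dict with dotted keys."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


rows = [flatten(user) for user in sample_response["results"]]
print(sorted(rows[0]))
```

Knowing in advance that the records sit under "results" and that fields such as name and location are nested makes the later steps (parsing and tabulating) much less of a guessing game.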

STEP 4 - Define the API Endpoint and Parameters:

Based on the documentation, we can construct a simple request. In this example, we fetch user data limited to users from the United States:

url = 'https://randomuser.me/api/'
params = {'nat': 'us'}

STEP 5 - Make the GET Request:

Use the requests.get() function with the URL and parameters:

response = requests.get(url, params=params)

STEP 6 - Handle the Response:

Check whether the request was successful, then process the data:

if response.status_code == 200:
    data = response.json()
    # Process the data as needed
else:
    print(f"Error: {response.status_code}")

STEP 7 - Convert our data into a DataFrame

To work with the data easily, we can convert it into a pandas DataFrame:

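if response.status_code == 200:
    data = response.json()
    # The user records live under the "results" key; json_normalize
    # flattens nested fields such as name and location into columns
    df = pd.json_normalize(data["results"])
    print(df.head())
else:
    print(f"Error: {response.status_code}")

Now, let’s exemplify it with a real case.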

Example 2: Working with the Eurostat API

Eurostat is the statistical office of the European Union. It provides high-quality, harmonized statistics on a wide range of topics such as economics, demographics, environment, industry, and tourism—covering all EU member states.

Through its API, Eurostat offers public access to a vast collection of datasets in machine-readable formats, making it a valuable resource for data professionals, researchers, and developers interested in analyzing European-level data.

STEP 0 - Understand the data contained in the API

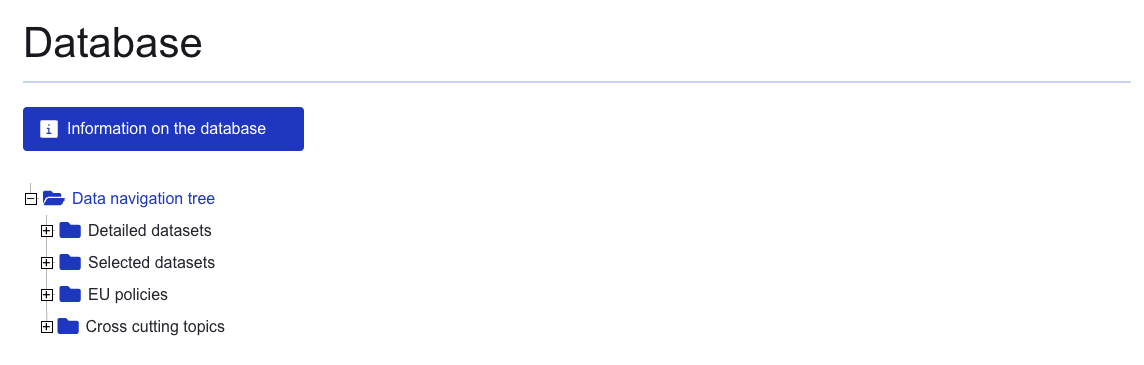

If you go check the Data section of EUROSTATS, you will find a navigation tree as follows.

We can try to identify some data of interest in the following subsections:

Detailed Datasets: Full Eurostat data in multi-dimensional format.

Selected Datasets: Simplified datasets with fewer indicators, in 2–3 dimensions.

EU Policies: Data grouped by specific EU policy areas.

Cross-cutting: Thematic data compiled from multiple sources.

STEP 1 - Checking its documentation

Always start with the documentation. You can find Eurostat’s API guide [here](https://wikis.ec.europa.eu/display/EUROSTATHELP/API+-+Getting+started+with+statistics+API). It explains the API structure, available endpoints, and how to form valid requests.

STEP 2 - Generating the first call request

To generate an API request with Python, we first need the requests library, which we already installed in the previous example. We can then build a request using a demo dataset from the Eurostat documentation.

# We import the requests library

import requests

# Define the URL endpoint -> We use the demo URL in the Eurostat API documentation.

url = "https://ec.europa.eu/eurostat/api/dissemination/statistics/1.0/data/DEMO_R_D3DENS?lang=EN"

# Make the GET request

response = requests.get(url)

# Print the status code and response data

print(f"Status Code: {response.status_code}")

print(response.json()) # Print the JSON response

Pro tip: We can split the URL into the base URL and its parameters, which makes it easier to understand what data we are requesting from the API.
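To see what that split amounts to, here is a small offline sketch using the standard library's urllib.parse rather than requests. It joins a base URL and a parameter dictionary into the full URL, then recovers the parameters again. It only illustrates the URL mechanics and sends nothing.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

base_url = "https://ec.europa.eu/eurostat/api/dissemination/statistics/1.0/data/DEMO_R_D3DENS"
params = {"lang": "EN"}

# Join the base URL and the encoded parameters, as an HTTP client would.
full_url = f"{base_url}?{urlencode(params)}"
print(full_url)

# Going the other way: recover the parameters from a full URL.
recovered = parse_qs(urlsplit(full_url).query)
print(recovered)
```

The requests library does this joining for you when you pass params, as in the version that follows.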

# We import the requests library

import requests

# Define the URL endpoint -> We use the demo URL in the Eurostat API documentation.

url = "https://ec.europa.eu/eurostat/api/dissemination/statistics/1.0/data/DEMO_R_D3DENS"

# Define the parameters -> We define the parameters to add in the URL.

params = {

'lang': 'EN' # Specify the language as English

}

# Make the GET request

response = requests.get(url, params=params)

# Print the status code and response data

print(f"Status Code: {response.status_code}")

print(response.json()) # Print the JSON response

STEP 3 - Determining what dataset to call

Instead of using the demo dataset, you can select any dataset from the Eurostat database. For example, let's query the dataset TOUR_OCC_ARN2, which contains tourism accommodation data.

# We import the requests library

import requests

# Define the URL endpoint -> We combine the base URL with the code of the dataset we want.

base_url = "https://ec.europa.eu/eurostat/api/dissemination/statistics/1.0/data/"

dataset = "TOUR_OCC_ARN2"

url = base_url + dataset

# Define the parameters -> We define the parameters to add in the URL.

params = {

'lang': 'EN' # Specify the language as English

}

# Make the GET request -> we generate the request and obtain the response

response = requests.get(url, params=params)

# Print the status code and response data

print(f"Status Code: {response.status_code}")

print(response.json()) # Print the JSON response

STEP 4 - Understand the response

Eurostat’s API returns data in JSON-stat format, a standard for multidimensional statistical data. You can save the response to a file and explore its structure:

import requests

import json

# Define the URL endpoint and dataset

base_url = "https://ec.europa.eu/eurostat/api/dissemination/statistics/1.0/data/"

dataset = "TOUR_OCC_ARN2"

url = base_url + dataset

# Define the parameters to add in the URL

params = {
    'lang': 'EN',  # Specify the language as English
    'time': 2019   # Keep only the observations for 2019
}

# Make the GET request and obtain the response

response = requests.get(url, params=params)

# Check the status code and handle the response

if response.status_code == 200:
    # Parse the JSON response
    data = response.json()

    # Generate a JSON file and write the response data into it
    with open("eurostat_response.json", "w") as json_file:
        json.dump(data, json_file, indent=4)  # Save JSON with pretty formatting

    print("JSON file 'eurostat_response.json' has been successfully created.")
else:
    print(f"Error: Received status code {response.status_code} from the API.")

STEP 5 - Transform the response into usable data.

Now that we have the data, we can convert it into a tabular format (CSV) to make it easier to analyze.
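The key idea is that JSON-stat stores values under a single flat position, with the last dimension varying fastest. The toy sketch below shows how such a position maps back to one category per dimension. The payload is hypothetical and far smaller than a real Eurostat response, and decode is a helper name introduced here for illustration only.

```python
# Toy JSON-stat-like payload (hypothetical values): 2 regions x 3 years.
toy = {
    "id": ["geo", "time"],
    "size": [2, 3],
    "dimension": {
        "geo": {"category": {"index": {"ES": 0, "FR": 1}}},
        "time": {"category": {"index": {"2017": 0, "2018": 1, "2019": 2}}},
    },
    # Sparse values keyed by flat position; position 4 is missing on purpose.
    "value": {"0": 10, "1": 11, "2": 12, "3": 20, "5": 22},
}


def decode(flat_index, sizes):
    """Convert a flat row-major position into one position per dimension."""
    positions = []
    for size in reversed(sizes):
        positions.append(flat_index % size)
        flat_index //= size
    return list(reversed(positions))


# Order the category codes of each dimension by their declared position.
codes = [
    sorted(toy["dimension"][dim]["category"]["index"],
           key=toy["dimension"][dim]["category"]["index"].get)
    for dim in toy["id"]
]

rows = []
for key, value in toy["value"].items():
    positions = decode(int(key), toy["size"])
    labels = [codes[d][p] for d, p in enumerate(positions)]
    rows.append((*labels, value))

print(rows)
```

Position 5 decodes to the second region and third year, and the missing position 4 simply produces no row. The full script applies the same modulo and integer-division idea to every dimension of the real response.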

import requests
import pandas as pd

# Step 1: Make the GET request to the Eurostat API
base_url = "https://ec.europa.eu/eurostat/api/dissemination/statistics/1.0/data/"
dataset = "TOUR_OCC_ARN2"  # Tourist accommodation statistics dataset
url = base_url + dataset
params = {'lang': 'EN'}  # Request data in English

# Make the API request
response = requests.get(url, params=params)

# Step 2: Check if the request was successful
if response.status_code == 200:
    data = response.json()

    # Step 3: Extract the dimensions and metadata
    dimensions = data['dimension']
    dimension_order = data['id']  # ['geo', 'time', 'unit', 'indic', etc.]

    # Extract labels for each dimension dynamically
    dimension_labels = {dim: dimensions[dim]['category']['label'] for dim in dimension_order}

    # Step 4: Determine the size of each dimension
    dimension_sizes = {dim: len(dimensions[dim]['category']['index']) for dim in dimension_order}

    # Step 5: Create a mapping from each position to its category code
    # Codes are sorted by their position in the category index, so position 0
    # maps to the first code (assumes 'index' is a code -> position dictionary)
    index_labels = {
        dim: sorted(dimensions[dim]['category']['index'],
                    key=dimensions[dim]['category']['index'].get)
        for dim in dimension_order
    }

    # Step 6: Create a list of rows for the CSV
    rows = []
    for key, value in data['value'].items():
        # `key` is a string like '123'; break it down into one code per dimension
        index = int(key)  # Convert string index to integer

        # Calculate the indices for each dimension (last dimension varies fastest)
        indices = {}
        for dim in reversed(dimension_order):
            dim_index = index % dimension_sizes[dim]
            indices[dim] = index_labels[dim][dim_index]
            index //= dimension_sizes[dim]

        # Construct a row with codes and labels from all dimensions
        row = {f"{dim.capitalize()} Code": indices[dim] for dim in dimension_order}
        row.update({f"{dim.capitalize()} Name": dimension_labels[dim][indices[dim]] for dim in dimension_order})
        row["Value (Tourist Accommodations)"] = value
        rows.append(row)

    # Step 7: Create a DataFrame and save it as CSV
    if rows:
        df = pd.DataFrame(rows)
        csv_filename = "eurostat_tourist_accommodation.csv"
        df.to_csv(csv_filename, index=False)
        print(f"CSV file '{csv_filename}' has been successfully created.")
    else:
        print("No valid data to save as CSV.")
else:
    print(f"Error: Received status code {response.status_code} from the API.")

STEP 6 - Generate a specific view

In our case, imagine we only want to keep the records for campsites, apartments, or hotels. We can filter the table on this condition and obtain a pandas DataFrame we can work with.

# Check the unique values in the 'Nace_r2 Name' column

set(df["Nace_r2 Name"])

# List of options to filter

options = ['Camping grounds, recreational vehicle parks and trailer parks',

'Holiday and other short-stay accommodation',

'Hotels and similar accommodation']

# Filter the DataFrame based on whether the 'Nace_r2 Name' column values are in the options list

df = df[df["Nace_r2 Name"].isin(options)]

df

Best Practices When Working with APIs

Read the Docs: Always check the official API documentation to understand endpoints and parameters.

Handle Errors: Use conditionals and logging to gracefully handle failed requests.

Respect Rate Limits: Avoid overwhelming the server—check if rate limits apply.

Secure Credentials: If the API requires authentication, never expose your API keys in public code.
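The error-handling and rate-limit advice above could look roughly like the sketch below: retry on status 429 (rate limited) or 5xx responses, waiting longer after each failed attempt. The function name, its parameters, and the stand-in replies are all assumptions made for this illustration, and the retry policy is just one reasonable choice, not a rule from any particular API.

```python
import time


def fetch_with_retries(fetch, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `fetch()` until it succeeds or attempts run out.

    `fetch` is any zero-argument callable returning (status_code, body).
    Status 429 (rate limited) and 5xx responses are retried with
    exponential backoff; other responses are returned immediately.
    """
    for attempt in range(max_attempts):
        status, body = fetch()
        retryable = status == 429 or 500 <= status < 600
        if not retryable or attempt == max_attempts - 1:
            return status, body
        sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...


# Usage with a stand-in for a real request: two rate-limit replies, then success.
replies = iter([(429, "slow down"), (429, "slow down"), (200, "ok")])
waits = []
result = fetch_with_retries(lambda: next(replies), sleep=waits.append)
print(result, waits)
```

In a real script, fetch would wrap a requests.get call and return response.status_code and response.text. For credentials, a common pattern is to read the key from an environment variable with os.environ.get instead of writing it into the code.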


And now, what you have been waiting for all along: here is our weekly cheatsheet 👇🏻

Conclusion

Eurostat’s API is a powerful gateway to a wealth of structured, high-quality European statistics. By learning how to navigate its structure, query datasets, and interpret responses, you can automate access to critical data for analysis, research, or decision-making—right from your Python scripts.

You can find the corresponding code in my brand-new DataBites GitHub repository, where I’ll share the code for upcoming code-alongs and projects.
